What every software tester needs to know about their job.

Undoubtedly, software testing is a job, yet it is also a kind of “art”. This is worth remembering whenever you test software, whether it is a calculator or the security of the most complex data communications systems. The two will obviously be tested in different ways, but the aim of your work is always the same: do it with the utmost dedication and know your craft.

Is Software Testing Tutorial right for you?

If you are:

• interested in problems of software testing …

• ready to start your adventure with software testing …

• willing to learn about software testing in an easy and effortless way …

• willing to learn the basics of test automation …

• ready to learn a few basic terms related to software testing …

• planning to attend a job interview or to take an important exam …

• or you simply wish to refresh the knowledge you already have …

…this tutorial is right for you!

In this article you’ll find all the basic information on the art of software testing. This knowledge is indispensable for every software tester who treats testing not as fun, but as work.

The basics of software testing

Why is software testing important?

A seemingly banal question, yet the answer to it is not so easy to find. We should be aware of one thing at the very start: testing itself does not raise the quality of software or documentation. A malfunctioning system may result in a loss of money, time and business reputation; it may also delay the publication of local government results, or even lead to a loss of health or life.

When testing detects defects, the quality of the system rises once they have been removed. Testing makes it possible to measure software quality in terms of the defects discovered, for both the functional and the non-functional requirements and attributes of the software. Testing also helps to build trust in the quality of the software created by the team (if the system testers find few defects or none).

The most important objectives of testing:

• discovering defects,

• building trust in the quality level,

• providing the information that is required for decision making,

• preventing defects.

Software testing is the execution of the program in order to discover the errors it may contain.

What is testing and how many tests are needed?

Software testing is the execution of the code for the combination of input data and status in order to detect errors. It is a process (a series of processes) designed to make sure that the result of a given code execution fully complies with the design objectives and that the execution does not produce results that do not correspond to the objectives.

Another thing to remember is that we are unable to test all the possible input/output data of the program. In an ideal world we would like to test all the possible kinds of input data, but the number of potential test cases may amount to hundreds, thousands, and in large systems even millions, which goes beyond the scope of human capabilities. The number of possible paths that lead through the program is immense, often practically countless.
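To make the scale concrete, the short sketch below counts the input combinations of a made-up form. The field names, the number of values per field and the assumed speed of one millisecond per test are all invented for illustration only.

```python
from math import prod

# Hypothetical input fields and the number of distinct values each accepts.
field_value_counts = {
    "age (0-120)": 121,
    "country": 195,
    "currency": 150,
    "amount in cents (0-999999)": 1_000_000,
}


def count_combinations(value_counts):
    """Return how many test cases exhaustive testing would need."""
    return prod(value_counts.values())


total = count_combinations(field_value_counts)

# Assumption: one automated test takes a millisecond and tests run back to back.
years = total / 1000 / 60 / 60 / 24 / 365
print(f"{total:,} combinations, roughly {years:.0f} years at 1 ms per test")
```

Even four modest fields produce more combinations than can be executed in a working lifetime, which is why test cases have to be selected rather than enumerated.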

It is impossible to state how much time is needed for testing. It is a very individual case which mostly depends on the time and the budget allowed. Testing should provide information that is sufficient for taking rational decisions about whether or not to allow for the system to go to another development stage or to give it to the customer.

What is an error?

• The software does not do something it should do according to its specification.

• The software does something it should not do according to its specification.

• The software does something that is not covered in its specification at all.

• The software does not do something that the specification does not cover, but it should.

• The software is difficult to understand, difficult to use, slow or (in the tester’s opinion) will be simply faulty in the eyes of the user.

Basic rules for software testing.

• Testing shows presence of defects

Testing may show defects, but it cannot prove that the software contains no defects.

• Exhaustive testing is impossible

It is an approach to testing in which the test sample contains all combinations of input data and preconditions: it is possible only in isolated cases.

• Testing should be started as early as possible

Testing activities should start in the software life cycle as early as possible. It may even start before the software is made. What is more, software testing may even precede software documentation. The very idea of the software may be subject to testing, as well as the possibility of its implementation.

• Error accumulation

The labour intensity of testing is shared in proportion to the anticipated and detected error density in the components. A small number of components usually contains the majority of defects detected before the release or is responsible for most crashes at production.

• The pesticide paradox

We experience it when the test set, repeated again and again, no longer detects any defects. The solution to this problem is proper management of test cases: they should be continuously reviewed, modified and updated.

• Testing is context-dependent

As stated above, the way in which a program is tested depends on its purpose and complexity. Safety-critical software will be tested differently than online shopping software.

• Absence-of-errors fallacy

The detection and elimination of errors will not help if the system is useless and fails to meet the customer’s and users’ demands.

The software testing process

According to The Certified Tester – Foundation Level Syllabus (ISTQB®) the basic testing process consists of the following:

Test planning and control

Test planning:

– verifying the test mission,

– defining the test strategy,

– defining the test objectives,

– defining the test activities that are aimed at meeting the objectives and fulfilling the test mission.

Test control:

– comparing actual progress against the plan,

– reporting the status (especially the deviations from the plan),

– taking actions necessary to meet the objectives and fulfil the mission of testing,

– continuous activity in the project,

– in order to control testing, the testing activities should be monitored throughout the project.

Test analysis and design

The test analysis and design has the following major tasks:

– reviewing the test basis (requirements, software integrity level, risk analysis reports, architecture, design, interface specifications),

– evaluating testability of the test basis and test objects,

– identifying and prioritizing test conditions based on the analysis of test items, the specification, behaviour and structure of the software,

– designing and prioritizing high level test cases,

– identifying necessary test data to support the test conditions and test cases,

– designing the test environment setup and identifying any required infrastructure and tools,

– creating bi-directional traceability between test basis and test cases.
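Bi-directional traceability from the last task can be pictured as two lookup tables, one per direction. The sketch below is only an illustration: the requirement and test case IDs are hypothetical, and real projects usually keep these links in a test management tool.

```python
from collections import defaultdict

# Hypothetical forward links: test case ID -> requirement IDs it covers.
test_to_requirements = {
    "TC-001": ["REQ-1"],
    "TC-002": ["REQ-1", "REQ-2"],
    "TC-003": ["REQ-3"],
}
all_requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]


def invert(links):
    """Build the backward direction: requirement ID -> test case IDs."""
    backward = defaultdict(list)
    for test_id, requirement_ids in links.items():
        for requirement_id in requirement_ids:
            backward[requirement_id].append(test_id)
    return dict(backward)


requirement_to_tests = invert(test_to_requirements)

# A requirement with no test case pointing at it is a coverage gap.
uncovered = [r for r in all_requirements if r not in requirement_to_tests]

print(requirement_to_tests)
print("Requirements without tests:", uncovered)
```

With both directions available, a changed requirement immediately shows which test cases need review, and a failed test case shows which requirements are at risk.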

Test implementation and execution

Test implementation and execution has the following major tasks:

– finalizing, implementing and prioritizing test cases (including the identification of test data),

– developing and prioritizing test procedures, creating test data and, optionally, preparing test harness and writing automated test scripts,

– creating test suites from the test procedures for efficient test execution,

– verifying that the test environment has been set up correctly,

– verifying and updating bi-directional traceability between the test basis and test cases,

– executing test procedures either manually or by using test execution tools, according to the planned sequence,

– logging the outcome of test execution and recording the identities and versions of the software under test, test tools and testware,

– comparing actual results with expected results,

– reporting discrepancies as incidents and analysing them in order to establish their cause (a defect in the code, in specified test data, in the test document, or a mistake in the way the test was executed),

– repeating test activities as a result of action taken for each discrepancy.

Evaluating exit criteria and reporting

Evaluating exit criteria has the following major tasks:

– checking test logs against the exit criteria specified in test planning,

– assessing if more tests are needed or if the exit criteria specified should be changed,

– writing a test summary for stakeholders.
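Checking test logs against exit criteria can be as simple as comparing a few numbers with agreed thresholds. The sketch below assumes two hypothetical criteria (a minimum pass rate and a maximum number of open critical defects); actual criteria are whatever was specified in test planning.

```python
from dataclasses import dataclass


@dataclass
class ExitCriteria:
    # Hypothetical thresholds that would be agreed during test planning.
    min_pass_rate: float = 0.95
    max_open_critical_defects: int = 0


def evaluate_exit(executed, passed, open_critical, criteria):
    """Return (met, reasons) for a summary taken from the test logs."""
    reasons = []
    pass_rate = passed / executed if executed else 0.0
    if pass_rate < criteria.min_pass_rate:
        reasons.append(
            f"pass rate {pass_rate:.0%} is below {criteria.min_pass_rate:.0%}"
        )
    if open_critical > criteria.max_open_critical_defects:
        reasons.append(f"{open_critical} critical defect(s) still open")
    return (not reasons, reasons)


met, reasons = evaluate_exit(
    executed=200, passed=184, open_critical=1, criteria=ExitCriteria()
)
print("Exit criteria met:", met)
for reason in reasons:
    print(" -", reason)
```

The list of reasons can go straight into the test summary for stakeholders, who then decide whether to run more tests or to change the criteria.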

Test closure activities

Test closure activities include the following major tasks:

– checking which planned deliverables have been delivered

– closing incident reports or raising change records for any that remain open

– documenting the acceptance of the system

– finalizing and archiving testware, the test environment and the test infrastructure for later reuse

– handing over the testware to the maintenance organization

– analyzing lessons learned to determine changes needed for future releases and projects

– using the information gathered to improve test maturity.

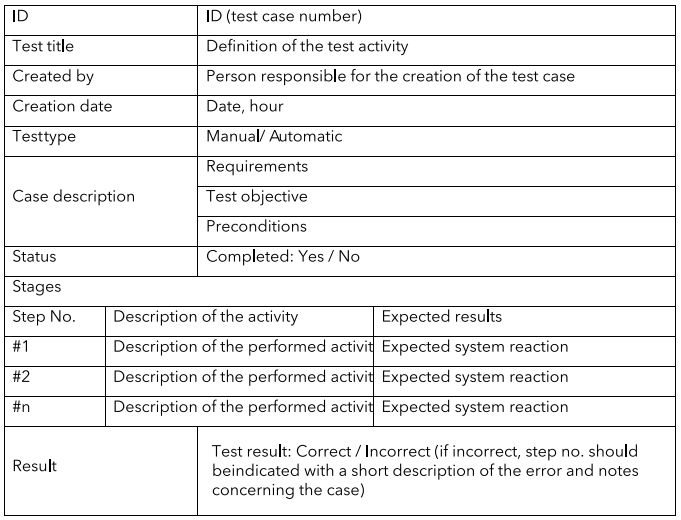

Good software test case

A test case is a set of input values, execution preconditions, expected results and execution postconditions, defined to cover a certain objective or test condition, such as a certain test path execution or checking the compliance with the specification.

Expected results should be produced as part of the specification of a test case and include outputs, changes to data and states, and any other consequences of the test.

If expected results have not been defined, then a plausible, but erroneous, result may be interpreted as the correct one. Expected results should ideally be defined prior to test execution. Software testing cannot guarantee that the program is free of errors. Therefore all testing is, by definition, incomplete, so test cases must be constructed in such a way as to minimise the consequences of that incompleteness.

In conclusion: the set of test cases used in testing of a particular program should be as complete as possible.

Every test case should include the following:

• ID – should clearly indicate where the given group of test cases belongs,

• Test title – should indicate what we want to achieve after a given case has been conducted,

• Reference – a given functionality, class, method, etc.,

• Created by – the data, e-mail or login of the person who created a given test case,

• Creation date – the date when a given test case was created, which helps build testing history,

• Type – whether the test should be executed manually or automatically,

• Description – a detailed description of the test case objective which should contain the following information:

1) Requirements – what requirements are related to a given test case,

2) Objective – a description of test execution, together with the details of what the test is about,

3) Preconditions – what conditions must be met in order to start test execution, e.g. what authorizations or previously collected data are needed,

• Status – informs whether the test has already been executed or not,

• Stages – step-by-step instruction on how the test should be executed. It consists of the following:

1) Step – number of the step taken,

2) Description – a detailed description of the activities at a given point,

3) Expectations – what result is expected after the activities at the given point have been performed,

• Result – field for test results and optional comments.
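The fields listed above map naturally onto a small record type. The sketch below is one possible representation, not a standard format: the class names, field names and sample values are assumptions chosen to mirror the list.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Step:
    number: int
    description: str
    expectation: str


@dataclass
class TestCaseRecord:
    case_id: str
    title: str
    reference: str
    created_by: str
    creation_date: date
    test_type: str          # "manual" or "automated"
    requirements: list      # part of the description
    objective: str          # part of the description
    preconditions: list     # part of the description
    steps: list = field(default_factory=list)
    status: str = "not executed"
    result: str = ""


# Hypothetical example of a filled-in test case.
login_case = TestCaseRecord(
    case_id="LOGIN-001",
    title="Registered user can log in with valid credentials",
    reference="Login form",
    created_by="tester@example.com",
    creation_date=date(2024, 1, 15),
    test_type="manual",
    requirements=["REQ-12"],
    objective="Check that a valid login opens the user dashboard.",
    preconditions=["A registered account exists"],
    steps=[
        Step(1, "Open the login page", "The login form is displayed"),
        Step(2, "Submit valid credentials", "The dashboard is displayed"),
    ],
)
print(login_case.case_id, "-", login_case.title, f"({len(login_case.steps)} steps)")
```

Keeping the expectation next to each step makes it harder to execute a step without knowing what a correct outcome looks like.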

It is important to be precise while describing test cases, so that they do not require specialist knowledge to perform. It should be borne in mind that not every prospective system user will be an expert in the given field.

The cases should also be properly commented on in order to make it possible to introduce corrections without wondering what a given part of the code does. While the tests are implemented, test cases are developed, implemented, prioritized and combined into test procedure specifications.

Test case basic template

How to report a detected error?

One of the most frequently made mistakes (both by beginners and more advanced testers) is that the reported errors lack sufficient description. When you report a detected defect, you need to remember that the created error report is a carrier of information in which you inform the programmer about the differences between the expected result and the current status. The primary objective of an error report is to let the programmer notice and understand the problem.

The report should also include all other necessary information on how to generate the error again. The detected error should be described in a way that is unambiguous, concise, precise and detailed, so that the programmer who reads the report does not have to guess what the author meant. The programmer should be able to generate the described error as quickly as possible, without the need to search throughout the whole service.

What to do when an error appears?

First, do not panic. This is the worst thing you can do. We should never lose our heads and reflexively close the windows that inform us about an error – this is what a software tester must never do. First of all, you should read the error message and take a screenshot (you will surely need it). Even if the message is incomprehensible to you and contains a sequence of strange, seemingly random characters, it may allow the programmer to find a defect in the code.

Another thing every software tester should get into the habit of doing is checking whether a given error is repeatable. The programmer needs to know whether an error is intermittent or can be reproduced every time. On detecting an error we should always check if, for example, other input values also trigger the error (maybe the problem appears only after a long text has been typed in, or the duration of the session is the issue).
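When the steps that lead to the error can be scripted, the repeatability check can be automated. The sketch below is a minimal illustration: `make_flaky_action` is a hypothetical stand-in for the real steps, and the number of attempts is an arbitrary choice.

```python
def classify_repeatability(action, attempts=10):
    """Run `action` several times and classify how often it fails."""
    failures = 0
    for _ in range(attempts):
        try:
            action()
        except Exception:
            failures += 1
    if failures == 0:
        return "not reproduced"
    if failures == attempts:
        return "repeatable"
    return f"intermittent ({failures}/{attempts} attempts failed)"


def make_flaky_action(fail_every):
    """Hypothetical stand-in for the real steps: fails on every n-th call."""
    calls = {"count": 0}

    def action():
        calls["count"] += 1
        if calls["count"] % fail_every == 0:
            raise RuntimeError("simulated error")

    return action


print(classify_repeatability(make_flaky_action(fail_every=1)))
print(classify_repeatability(make_flaky_action(fail_every=3)))
```

The resulting label, together with the failure count, is exactly the kind of detail worth copying into the error report.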

In most cases, you will not know the exact reason for the appearance of the defect when you detect it. You are not likely to give the programmer a ready solution to the problem, but you can help by providing a description of your guesses. Yet, it is important not to use such phrases as “It seems to me that…”, “The problem may be caused by…” or “It may be that…”.

How to report?

Title

A good title is concise and describes the problem in a couple of words. Yet, you should avoid phrases that are too general, such as “The menu does not work”. A much better version would be “The items on the menu are unclickable”.

Error location

Here we type the URL address or a path that points to the location where the error appeared, together with the location on the page.

Environment

In the case of applications and websites it is necessary to supply the information concerning the version of the browser and the operating system. Similarly, while reporting mobile device errors, it is necessary to supply device name, operating system version and web browser version.

Replication path

Programmers like detail, and the replication path is often indispensable in order to fix an error. Unfortunately, we have to be aware that the developer may reject a report if they do not know how to generate the described bug. There are many ways in which the error replication may be described, yet we should remember one rule: the simpler, the better. Long, complex sentences should be avoided.

Additional information

Here you can type your guesses and observations. It is always worth noting whether the error is repeatable or intermittent. It is also good practice to state your expectations concerning the desired result. If you feel you can do it, you may also add your guesses about the reasons why the error appears.

It is also very important how you submit your notes and observations. No matter what error you detected, remember that the description relates to the situation, not the person who coded a given functionality. While reporting an error, never criticize the programmer; the error report is not the place for it. Tensions between testers and analysts, designers and programmers may be avoided by communicating errors, defects and crashes in a constructive way.
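The report sections described above (title, error location, environment, replication path, additional information) can be assembled by a small helper so that none of them is forgotten. This is only a sketch: the function name, parameters and sample values are hypothetical, and most incident management tools provide their own form for this.

```python
def format_error_report(title, location, environment, steps,
                        expected, actual, repeatable):
    """Assemble a plain-text error report from its sections."""
    lines = [
        f"Title: {title}",
        f"Error location: {location}",
        f"Environment: {environment}",
        "Replication path:",
    ]
    # One short, numbered sentence per step keeps the path easy to follow.
    lines += [f"  {number}. {step}" for number, step in enumerate(steps, start=1)]
    lines += [
        f"Expected result: {expected}",
        f"Actual result: {actual}",
        f"Repeatable: {'yes' if repeatable else 'intermittent'}",
    ]
    return "\n".join(lines)


report = format_error_report(
    title="The items on the menu are unclickable",
    location="https://example.com/ (top navigation bar)",
    environment="Hypothetical browser 1.0 on a hypothetical desktop OS",
    steps=["Open the home page", "Click any item in the top menu"],
    expected="The selected page opens",
    actual="Nothing happens",
    repeatable=True,
)
print(report)
```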

Test design techniques

The objective of test design techniques is to define the test conditions, test cases and test data. The least effective way of designing test cases is a random selection from all the possible cases in a given situation. It gives the lowest probability of error detection, and a random set of test cases is very unlikely to be an optimal or even near-optimal set. Test design techniques are traditionally divided into black-box and white-box methods.

Black-box techniques

Black-box testing (testing based on specification) is the treatment of the tested program as a kind of specific box whose internal structure remains unknown. While testing with the use of the black-box method only the functional aspect is important, i.e. the results achieved for concrete input values.

The testing objective is to define the conditions in which the result fails to comply with the specification (no information of the internal structure of the tested system or components is used for testing).

Specification-based software testing methods:

• Division into equivalence classes,

• Boundary value analysis,

• Decision table testing,

• State transition testing,

• Testing based on test cases.
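The first two techniques on this list, equivalence classes and boundary value analysis, can be illustrated with a few lines of code. The function under test and its age limits are invented for this sketch; only the way the test values are chosen matters.

```python
def ticket_category(age):
    """Hypothetical function under test: classify a passenger by age."""
    if age < 0 or age > 120:
        raise ValueError("age out of range")
    if age <= 17:
        return "child"
    if age <= 64:
        return "adult"
    return "senior"


# Equivalence classes: one representative value per valid partition.
equivalence_cases = [(10, "child"), (40, "adult"), (80, "senior")]

# Boundary values: both edges of every valid partition.
boundary_cases = [
    (0, "child"), (17, "child"),
    (18, "adult"), (64, "adult"),
    (65, "senior"), (120, "senior"),
]

# Values just outside the valid range represent the invalid partitions.
invalid_values = [-1, 121]


def run_cases():
    """Run all checks and return how many were executed."""
    for age, expected in equivalence_cases + boundary_cases:
        assert ticket_category(age) == expected, f"age {age}"
    for age in invalid_values:
        try:
            ticket_category(age)
        except ValueError:
            continue
        raise AssertionError(f"age {age} should be rejected")
    return len(equivalence_cases) + len(boundary_cases) + len(invalid_values)


print(run_cases(), "checks passed")
```

A handful of values chosen this way stands in for all 121 valid ages, because every value in a partition is expected to behave like its representative, while the boundaries target the places where off-by-one mistakes tend to hide.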

The advantages of black-box testing:

• Tests are repeatable,

• The environment in which testing is performed gets tested,

• The effort involved may be reused multiple times.

The disadvantages of black-box testing:

• Test results may be estimated too optimistically,

• Not all the properties of the system may be tested,

• The cause of the error is unknown.

White-box techniques

White-box testing (structure-based testing techniques) is based on the internal program structure and makes it the foundation for the design of test cases. The objective of structural tests is the analysis of the coverage, i.e. the extent to which the structure (a selected structure type) has been tested by the prepared set of tests.

Structure-based software testing techniques:

• Statement (instruction) testing and coverage,

• Decision testing and coverage,

• Condition testing and coverage,

• Multiple condition testing and coverage.
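The difference between statement (instruction) coverage and decision coverage shows up even in a function with a single `if`. The function below is hypothetical, and the coverage is reasoned about by hand here; in practice a coverage tool would measure it.

```python
def apply_discount(price, is_member):
    """Hypothetical function under test with a single decision."""
    if is_member:
        price = price - price // 10  # members get 10% off (integer prices)
    return price


# This one test executes every statement (100% statement coverage),
# but the decision `if is_member` is only ever evaluated as True.
statement_coverage_tests = [((100, True), 90)]

# Decision coverage additionally needs the False outcome of the decision.
decision_coverage_tests = statement_coverage_tests + [((100, False), 100)]


def decision_outcomes(tests):
    """Return the set of outcomes the decision takes across the tests."""
    return {bool(args[1]) for args, _expected in tests}


for args, expected in decision_coverage_tests:
    assert apply_discount(*args) == expected

print("Outcomes with statement coverage only:", decision_outcomes(statement_coverage_tests))
print("Outcomes with decision coverage:", decision_outcomes(decision_coverage_tests))
```

Full decision coverage implies full statement coverage, but not the other way round, which is why the second test is needed.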

The advantages of white-box testing:

• since the knowledge of the code structure is required, it is easy to define what set of input/output values is needed to test the application effectively

• apart from the main purpose, testing also helps optimize application code,

• it allows the cause and location of the error to be defined precisely.

The disadvantages of white-box testing:

• since the knowledge of the code structure is required, a tester who knows programming is needed in order to run the tests, which increases the costs,

• it is almost impossible to check every single line of the code in search of hidden errors, which may result in errors after the testing stage.

Experience-based testing

Experience-based tests rely on the tester’s skills and intuition, together with their experience in testing similar applications or technologies. These techniques are efficient in error detection, but not as suitable for testing coverage or creating reusable test procedures as other techniques. Testers tend to rely on their experience while testing. With this approach they react more to events rather than planned approaches while testing.

Experience-based software testing techniques:

• Error guessing,

• Exploratory testing,

• Fault attack.

In defect-based and experience-based techniques, knowledge of defects and other experience is used to make defect detection more effective. These techniques comprise a broad range of activities, from “quick tests”, where the tester’s activities are not pre-planned, to planned sessions with scripts.

They are almost always useful, yet they are particularly useful in the following circumstances:

• the specification is missing,

• the tested system is insufficiently documented,

• there is not enough time to design and create test procedures,

• the testers have experience in the tested field or technology,

• variety is desired, in contrast to testing with scripts,

• while analysing a breakdown at production.

Software test levels

Software testing is a process divided into levels. Each of the test levels corresponds to a subsequent stage of software design. For each of the test levels, the following can be identified: the generic objectives, the work product(s) being referenced for deriving test cases (i.e., the test basis), the test object (i.e., what is being tested), typical defects and failures to be found, test harness requirements and tool support, and specific approaches and responsibilities.

Module (component) tests

Typical test objects:

• Modules,

• Programs,

• Data conversion / migration programs,

• Database modules,

• Objects.

Main test features:

• Module testing searches for defects in and verifies the functioning of the software that is separately testable,

• Component testing may include testing of functionality and specific non-functional characteristics, such as resource-behaviour (e.g. searching for memory leaks) or testing the resistance to an attack,

• Test cases are derived from work products such as a specification of the component, the software design or the data model,

• Defects are typically fixed as soon as they are found, without formally managing these defects.

Integration testing

Typical test objects:

• Subsystem database implementation,

• Infrastructure and Interfaces,

• System configuration and configuration data,

• Subsystems.

Main test features:

• Integration testing tests interfaces between components, interactions with different parts of a system, such as the operating system, file system and hardware, and interfaces between systems

• The greater the scope of integration, the more difficult it becomes to isolate defects to a specific component or system, which may lead to increased risk and additional time for troubleshooting.

• Testing of specific non-functional characteristics (e.g. performance) may be included in integration testing as well as functional testing.

• At each stage of integration, testers concentrate solely on the integration itself. For example, if they are integrating module A with module B they are interested in testing the communication between the modules, not the functionality of the individual module as that was done during module testing.

• Ideally, testers should understand the architecture and influence integration planning.

System testing

Typical test objects:

• System, user and operation manuals

• System configurations and configuration data.

Main test features:

• System testing is concerned with the behaviour of a whole system/product.

• The testing scope shall be clearly addressed in the Master and/or Level Test Plan for that test level.

• System testing should investigate functional and non-functional requirements of the system

• The test environment should correspond to the final target or production environment as much as possible in order to minimize the risk of environment-specific failures.

Acceptance testing

Typical test objects:

• Business processes on fully integrated system,

• Operational and maintenance processes,

• User work procedures,

• Forms and Reports,

• Configuration data.

Main test features:

• Customers or users of a system are often responsible for acceptance testing.

• The objective in acceptance testing is to establish confidence in the system, parts of the system or specific non-functional characteristics of the system.

• Acceptance testing may assess the system’s readiness for deployment and use, although it is not necessarily the final level of testing.

• Acceptance testing may occur at various times in the life cycle.

Typical forms of acceptance testing include the following:

• User acceptance – verifies the compliance of the system with users’ needs,

• Operational – the acceptance of the system by the system administrators, including: the testing of backup and its ability to restore the functionality after problems occur, etc.,

• Contract and regulation – testing the criteria for producing custom-developed software. Acceptance criteria should be defined when the parties agree to the contract,

• Alpha and beta testing – developers of software that customers buy off a retail store shelf want feedback from potential customers before the product is launched on the market. Alpha testing is performed at the developing organization’s site. Beta testing is performed by customers. Both testing types are performed by potential recipients of the product.

Test types

Functional testing

Functional testing objective:

Functional testing aims at a detection of a discrepancy between a program and its external specification which precisely describes its behaviour from the user’s viewpoint or is based on the knowledge and experience of the testing team. The objective of functional testing is not to prove the compliance of the program with its external specification, but to detect as many incompatibilities as possible.

Main test features:

• Functions are “what” the system does.

• Specification-based techniques may be used to derive test conditions and test cases from the functionality of the software or system.

• Functional testing is often based on black-box testing.

Non-functional testing

Non-functional testing objective:

The objective of non-functional testing is to obtain information about, or measurements of, the characteristics of a system/application/module.

Main test features:

• Non-functional testing checks “how” the system works.

• Non-functional testing may be performed at all test levels.

• Non-functional testing considers the external behaviour of the software and in most cases uses black-box test design techniques to accomplish that.

• These are the tests required to measure characteristics of systems and software that can be quantified on a varying scale (e.g. response times for performance testing).

Non-functional tests classification:

• performance testing

• load testing

• stress testing

• usability testing

• maintainability testing

• reliability testing

• portability testing

• security testing

Structural testing

Structural testing objectives:

Structural techniques are used in order to help measure the thoroughness of testing through assessment of coverage of a structure (a selected type of structure). Coverage is the extent that a structure has been exercised by a test suite, expressed as a percentage of the items being covered.

Main test features:

• Structural testing is white-box testing.

• Structural testing may be performed at all test levels.

• Structural techniques are best used after specification-based techniques, in order to help measure the thoroughness of testing through assessment of coverage of a type of structure.

• At all test levels, but especially in component testing and component integration testing, tools can be used.

• Structural testing may be used on the architecture of the system.

Confirmation and regression testing

A lot of people (including professional testers) often confuse the two terms and use them interchangeably, as if they meant the same. It is, of course, a serious mistake and every tester should have the basic knowledge about these two test types.

Regression testing objective:

Regression testing aims to verify the software after modifications have been introduced and to confirm that both the software and the test environment are ready for further test activities, transition to another iteration of the test project, etc.

Confirmation testing objective:

Confirmation testing is performed in order to confirm that the detected defect was fixed by the programmers.

Main test features:

• The tests that are used in confirmation testing and regression testing must be repeatable.

• Regression testing is performed when the software, or its environment, is changed.

• The scope of regression testing depends on the risk of failure to detect any defects in the software that previously worked correctly.

• Regression testing may be performed at all test levels, and includes functional, non-functional and structural testing.
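The difference between the two test types can be shown on a tiny example. The function and the defect are hypothetical: assume a report said that user names with surrounding spaces were not recognised, and the programmer has just added `strip()` to fix it.

```python
def normalize_username(name):
    """Hypothetical function after the fix: it now also strips whitespace."""
    return name.strip().lower()


# Confirmation test: the exact case from the defect report, re-run after the fix.
confirmation_tests = [("  Alice ", "alice")]

# Regression tests: cases that passed before the fix and must still pass.
regression_tests = [("Bob", "bob"), ("carol", "carol"), ("", "")]


def run(tests):
    """Return the inputs whose actual result differs from the expected one."""
    return [given for given, expected in tests
            if normalize_username(given) != expected]


print("Confirmation failures:", run(confirmation_tests))
print("Regression failures:", run(regression_tests))
```

The confirmation test answers "is the reported defect gone?", while the regression tests answer "did the fix break anything that used to work?". Both sets must be repeatable so that they can be re-run after every change.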

Maintenance testing

Maintenance testing objective:

Maintenance testing is done on an existing operational system, and is triggered by modifications, migration, or retirement of the software or system. In addition to testing what has been changed, maintenance testing includes regression testing of the parts of the system that have not been changed.

Main test features:

• Modifications may include planned enhancements, updates or hotfixes or environment changes such as a planned upgrade of the operating system version, databases or off-the-shelf software, as well as operating system security patches.

• Maintenance testing for software migration (e.g. from one platform to another) should also include, apart from testing the changes in the software, production tests of the new environment.

• Maintenance testing for the retirement of a system may include the testing of data migration or archiving if long data-retention periods are required.

Tool support for testing

Test tools classification

There are many tools to support different aspects of testing. Some tools clearly support one activity; others may support more than one activity, but are classified under the activity with which they are most closely associated. Some commercial tools support only one type of activity, yet some commercial tool providers offer tools bundled into one package, which supports many different test activities.

Major types of tool support for testing

• Test and report management tools

These tools provide interfaces for executing tests, tracking defects and managing requirements, along with support for quantitative analysis and reporting of the test objects. They also support tracing the test objects to requirement specifications and might have an independent version control capability or an interface to an external one.

• Automation tools

These tools allow for automation of the activities that would require a large input of work if they were performed manually. Test automation tools help increase the performance of testing activities by the automation of repeatable tasks or support for test activities performed manually, such as test planning, test design, test reporting and monitoring. Automation is an especially good solution for long-term projects. The automation of most regression tests saves time. Tests last a shorter time and in the long term the cost involved in automation is quickly recovered.

• Incident management tools

These are the tools that help register incidents and track their status. They often offer workflow functions for tracking and controlling the allocation, correction and retesting of incidents. They also provide reporting facilities.

• Test execution tools

Test execution tools execute test objects using automated test scripts which are designed in such a way as to implement the electronically stored tests. Capturing tests by recording the actions of a manual tester seems attractive, but this approach does not scale to large numbers of automated test scripts. A captured script is a linear representation with specific data and actions as part of each script. This type of script may be unstable when unexpected events occur.

• The incident management system should provide the following:

a) flexible tools that reduce the multitude of events presented to the administrator,

b) a scalable graphical interface that presents the relevant information to the appropriate persons,

c) reporting methods (pager, e-mail, phone) to inform particular technical assistance management groups and users about the problems that have appeared,

d) tools for events correlation that minimize the time needed to locate and isolate the damage, without the need to involve experts,

e) tools that support a quick assessment of how the damage affects business (services, customers).

• Tools to support performance tests

Tools to support performance testing usually have two functionalities: load generation and transaction measurement. The generated load may simulate both many users and large volumes of input data. During test execution, measurements are logged only for selected transactions. Performance testing tools usually provide reports based on the logged transactions, as well as graphs of load against response times.
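The two functionalities, load generation and transaction measurement, can be sketched in a few lines. This is a simplified illustration, not a replacement for a real performance testing tool: the class and method names are hypothetical, the "transaction" is just a `Runnable`, and every transaction is logged rather than a selected subset.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class LoadSketch {

    // Load generation: runs `users` virtual users in parallel, each performing
    // `perUser` transactions. Transaction measurement: the duration of every
    // transaction is logged in nanoseconds.
    static List<Long> generateLoad(int users, int perUser, Runnable transaction) {
        ExecutorService pool = Executors.newFixedThreadPool(users);
        try {
            List<Future<List<Long>>> futures = new ArrayList<>();
            for (int u = 0; u < users; u++) {
                Callable<List<Long>> user = () -> {
                    List<Long> times = new ArrayList<>();
                    for (int i = 0; i < perUser; i++) {
                        long start = System.nanoTime();
                        transaction.run();
                        times.add(System.nanoTime() - start);
                    }
                    return times;
                };
                futures.add(pool.submit(user));
            }
            List<Long> log = new ArrayList<>();
            for (Future<List<Long>> f : futures) {
                log.addAll(f.get());
            }
            return log;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("load run failed", e);
        } finally {
            pool.shutdown();
        }
    }

    // Average response time in milliseconds over the logged transactions.
    static double averageMillis(List<Long> nanos) {
        long sum = 0;
        for (long n : nanos) {
            sum += n;
        }
        return nanos.isEmpty() ? 0.0 : (sum / 1_000_000.0) / nanos.size();
    }

    public static void main(String[] args) {
        // Stand-in transaction: a short sleep instead of a real request.
        List<Long> log = generateLoad(5, 10, () -> {
            try {
                Thread.sleep(2);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        System.out.println("transactions logged: " + log.size());
        System.out.println("average response time [ms]: " + averageMillis(log));
    }
}
```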

• Tools to prepare test data

These tools allow for selecting data from an existing database, or for creating, generating, processing and editing data to be used later in testing.
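Generating test data can be as simple as the sketch below. It assumes a hypothetical `Customer` record with invented fields. A fixed seed is used so that the same data set can be generated again when a test has to be repeated.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

class TestDataSketch {

    // Hypothetical record used as test input.
    static final class Customer {
        final int id;
        final String email;
        final int age;

        Customer(int id, String email, int age) {
            this.id = id;
            this.email = email;
            this.age = age;
        }
    }

    // Generates `count` customers; the same seed always yields the same data.
    static List<Customer> generate(int count, long seed) {
        Random random = new Random(seed);
        List<Customer> customers = new ArrayList<>();
        for (int i = 1; i <= count; i++) {
            int age = 18 + random.nextInt(63); // 18..80 inclusive
            customers.add(new Customer(i, "user" + i + "@example.com", age));
        }
        return customers;
    }

    public static void main(String[] args) {
        for (Customer c : generate(3, 42L)) {
            System.out.println(c.id + ";" + c.email + ";" + c.age);
        }
    }
}
```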

Briefly about automated tests

The types of automated tests:

• Module tests (created and performed by programmers for programmers) – testing of single program modules,

• Load tests (created and performed by testers) – performed in order to determine the performance of the software,

• Functional tests (created and performed by testers) – tests based on an analysis of the specification of the functionality of a system or its component.
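To give an idea of the first type, a module test checks a single module in isolation. The sketch below uses plain Java without a test framework. `applyDiscount` is a hypothetical module invented for the example, and in practice a framework such as JUnit would usually be used instead of a hand-written check method.

```java
class ModuleTestSketch {

    // Module under test (hypothetical): price in cents after a percentage discount.
    static int applyDiscount(int priceCents, int percent) {
        if (percent < 0 || percent > 100) {
            throw new IllegalArgumentException("percent must be in the range 0..100");
        }
        return priceCents - (priceCents * percent) / 100;
    }

    // Module test: typical values, boundary values and one invalid input.
    // Returns true only when every check passes.
    static boolean testApplyDiscount() {
        boolean ok = applyDiscount(1000, 0) == 1000
                && applyDiscount(1000, 25) == 750
                && applyDiscount(1000, 100) == 0;
        try {
            applyDiscount(1000, 101);
            ok = false; // an exception was expected for an invalid percentage
        } catch (IllegalArgumentException expected) {
            // correct behaviour for invalid input
        }
        return ok;
    }

    public static void main(String[] args) {
        System.out.println(testApplyDiscount() ? "PASS" : "FAIL");
    }
}
```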

What is suitable for automation and what is not?

An application that is stable is a very good candidate for automation (the cost of maintaining automated tests is low in this case). Every application that is to be automated should have documentation on the basis of which the tests will be created. Should the documentation contain errors or be incomplete, the design of the automated tests will also be erroneous. Automation should focus especially on those areas of the application which are most frequently used.

Test automation will shorten the testing time of those areas, and an automated test will relieve the tester of analysing the same path yet again. Automated tests are also useful when a large amount of test data is needed: the set of test data required for application testing can be prepared in a simple way. Automation may, however, become a considerable problem when a fairly complex application is to be automated. A great number of possible cases may make it difficult to anticipate and automate all of them in such a way as to achieve a stable test.

It is better to refrain from automating applications that are often modified. Such tests are costly to maintain, as they require continuous work to keep them in line with the changing requirements.

Advantages and disadvantages of automated tests

Advantages of automated tests:

• The cost of error detection is lower – module tests,

• It is possible to use a large quantity of test data – performance tests,

• Test analysis – on test completion the program provides information on test progress,

• It is possible to reproduce an identical test – it is particularly useful when we want to check whether the fixes introduced did solve the problem,

• A considerable acceleration of testing speed – testing programs perform tests much more quickly than testers.

Disadvantages of automated tests:

• The cost of purchasing the automation tools (free tools are also available),

• The cost of script execution, development and maintenance and the cost of training,

• An automated test is incapable of substituting a human. It will not analyse the logs, classify the defects and errors, or report and describe the errors to be fixed.

SELENIUM – the free test automation tool

Selenium is primarily a tool for the automation of web applications. The tool helps testers perform functional tests and regression tests. The tool supports all popular platforms: Windows, Linux and macOS. The framework consists of the following components: IDE, RC, WebDriver, GRID.

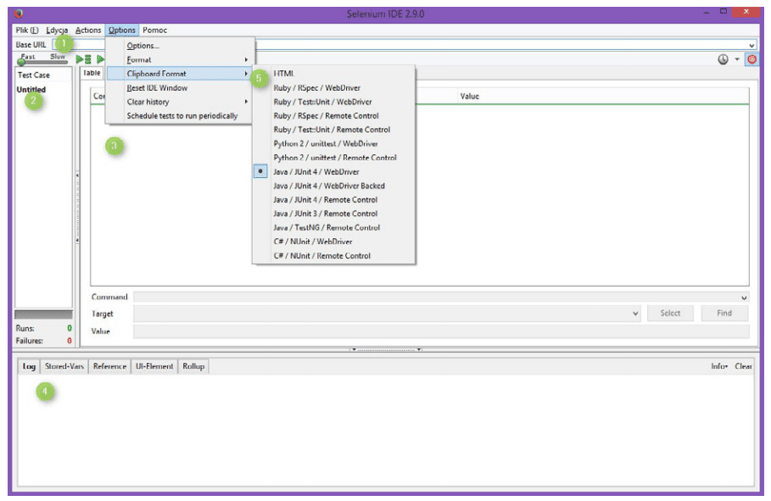

Selenium IDE

Selenium IDE is a Firefox plugin that allows you to record and play back tests. It is possible to write simple scripts in the internal script language of the plugin. The tool is suitable for small projects. Knowledge of a programming language is not required to use this tool, which makes it a perfect tool for beginner testers who are taking their first steps in test automation.

Selenium IDE advantages:

• Simple installation and easy to use,

• Knowledge of a programming language is not required,

• It is possible to export tests into a particular programming language,

• A large number of additional, useful plugins.

Selenium IDE disadvantages:

• Designed for rather simple tests,

• Available only as a Firefox plugin,

• Rather low testing speed.

1. The Base URL bar is where we type in the base address of the page we want to test. It may be any web page, e.g. www.divante.co. Below it is the toolbar, where we can set the speed of our tests: fast or slow. This option is useful when we want to check whether a recorded test is performed correctly. Next to the testing speed slider there are control buttons which execute all recorded tests or a selected test group, or stop the execution of tests at any time. The Enter-shaped icon allows us to execute a test step by step (debugger). The violet swirl-shaped icon groups any number of commands into one, similarly to methods, which contain a sequence of code and may be invoked by a single name. In the upper right corner, under the Base URL bar, there is a red dot which starts and stops the recording of the individual steps of the test.

2. The Test Case section contains the test cases that we recorded. It allows us to precisely document and control all the changes that we introduce. Each of the cases may have a different name. At the bottom there is a counter of the tests that we created with the use of Selenium IDE, including the tests that failed.

3. The Table field. While recording a test, Selenium generates the test code on its own and also makes a list of the commands required to perform the test. Here we can also manually declare individual commands that are to be executed automatically by the IDE.

4. The log section. This section contains a few tabs which are mostly used for error reporting, syntax suggestions for created commands, etc.

5. Language selection. In this section we can choose the programming language in which we wish to generate our test. To make this possible, it is necessary to select “Enable experimental features” in the Options tab. The Format tab then lets us choose the language in which our script will be generated. By default, tests are generated in HTML.

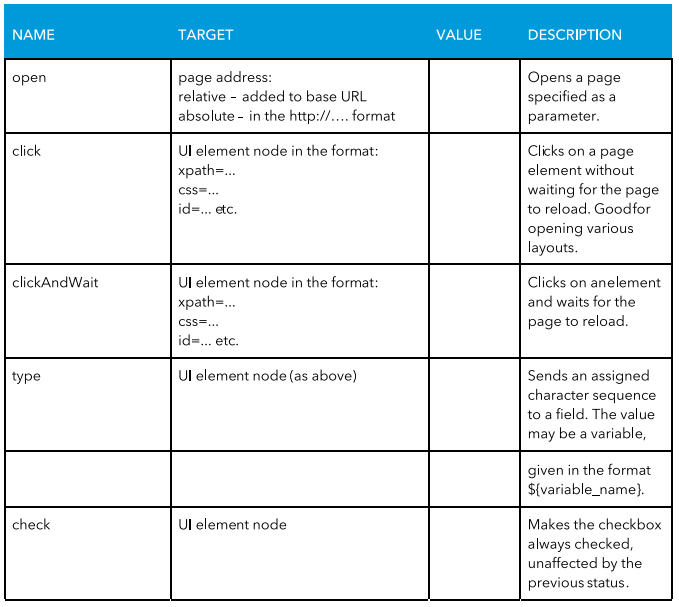

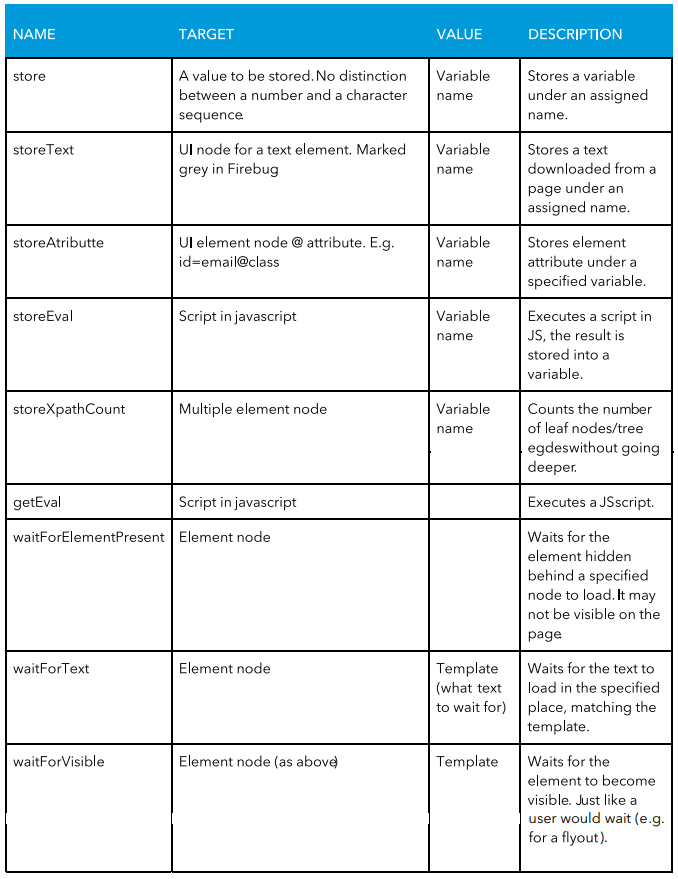

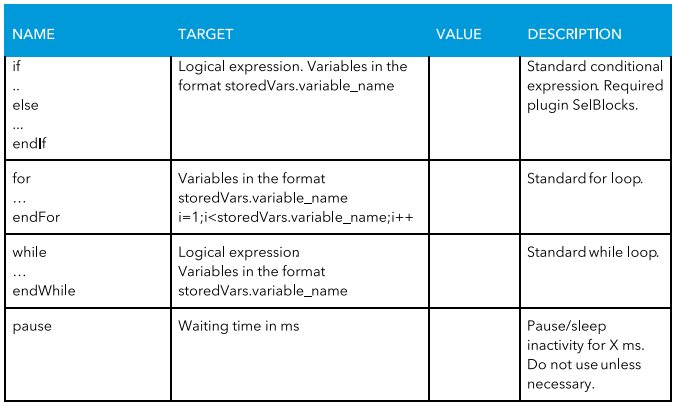

Most often used commands in Selenium IDE:

Selenium RC

Selenium Remote Control (aka Selenium 1) supports writing and executing tests with the use of any browser. Selenium RC consists of two components. One is Selenium Server, on which all previously prepared tests are run. The other component is made up of client libraries which offer an interface between a programming language and Selenium RC Server services. Selenium RC was the main framework in the Selenium family for a long time. It supports the following programming languages: Java, C#, PHP, Python, Perl, Ruby.

Selenium RC advantages:

• Support for various browsers,

• It is possible to perform data-driven test types,

• A considerably higher testing speed than in Selenium IDE.

Selenium RC disadvantages:

• A more complex installation than Selenium IDE,

• Requires the knowledge of programming,

• Requires RC server for operation,

• Slower than its successor WebDriver.

Now Selenium RC has been officially replaced by Selenium WebDriver.

Selenium WebDriver

Selenium WebDriver was created as a fusion of the Selenium and WebDriver libraries. The tool is an external *.jar library which combines a given browser driver and a Selenium server. WebDriver provides the user with a ready API that supports interaction with a browser. Selenium WebDriver supports the following programming languages: Java, C#, PHP, Python, Perl, Ruby.

Selenium WebDriver advantages:

• Direct communication with the browser,

• High testing speed,

• Better stability than Selenium RC,

• Support for various browsers.

Selenium WebDriver disadvantages:

• Requires the knowledge of a programming language,

• More difficult installation than Selenium IDE,

• Issues with the support for the latest browsers.

Page Object Pattern

The Page Object Pattern (POP) is a design pattern in which every page is represented by a separate class. Having one common page model both for programmers and testers is ideologically unacceptable. Testers should design their own page model, independently of developers.

In this way both the deliberate and the unintended concealment of errors by programmers can be eliminated. A common feature of most POP versions is that the page class accepts a driver in its constructor. Every class allows for interaction with the page through a clear set of methods. The advantage is not only clearer code, but also the possibility to reuse the fragments prepared in this way in many testing scenarios.

While testing a web application we usually deal with pages, so it is very convenient to use the Page Object pattern in order to keep the code that refers to one page in one place. Should something change on the page, there is no need to change the testing scenarios, but only to update the object that represents the page. A Page Object does not have to represent the whole page; it may refer only to a part of it, e.g. the page navigation.
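The pattern can be sketched as follows. To keep the example runnable without a browser, it does not use the Selenium API: the `Browser` interface, the `FakeBrowser` class, the element identifiers and the URL are all hypothetical stand-ins. In a real project the page class would receive a WebDriver instance in its constructor instead.

```java
import java.util.HashMap;
import java.util.Map;

class PageObjectSketch {

    // Hypothetical, simplified stand-in for a browser driver (NOT the Selenium API).
    interface Browser {
        void open(String url);
        void type(String elementId, String text);
        void click(String elementId);
        String title();
    }

    // Page object: everything the tests know about the login page lives here.
    static final class LoginPage {
        private static final String USER_FIELD = "username";
        private static final String PASSWORD_FIELD = "password";
        private static final String SUBMIT_BUTTON = "login-button";

        private final Browser browser;

        // The page class accepts the driver in its constructor.
        LoginPage(Browser browser) {
            this.browser = browser;
        }

        LoginPage open() {
            browser.open("https://example.test/login");
            return this;
        }

        // Logs in and returns the title of the page shown afterwards.
        String loginAs(String user, String password) {
            browser.type(USER_FIELD, user);
            browser.type(PASSWORD_FIELD, password);
            browser.click(SUBMIT_BUTTON);
            return browser.title();
        }
    }

    // In-memory fake so that the sketch runs without a real browser.
    static final class FakeBrowser implements Browser {
        private final Map<String, String> fields = new HashMap<>();
        private String title = "";

        public void open(String url) {
            title = "Login";
        }

        public void type(String elementId, String text) {
            fields.put(elementId, text);
        }

        public void click(String elementId) {
            boolean ok = "alice".equals(fields.get("username"))
                    && "secret".equals(fields.get("password"));
            title = ok ? "Dashboard" : "Login failed";
        }

        public String title() {
            return title;
        }
    }

    public static void main(String[] args) {
        // The test scenario talks only to the page object, never to element ids.
        String title = new LoginPage(new FakeBrowser()).open().loginAs("alice", "secret");
        System.out.println(title);
    }
}
```

If the identifier of the login button changes, only the constant in `LoginPage` has to be updated and the scenarios stay untouched.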

This is how we can search for the elements available on the page:

• Unique identifier (id),

• CSS class name,

• HTML tag name,

• Name attribute,

• CSS3 selectors,

• Text or text fragment contained in the element.

WebDriver basic methods

• The driver.get(String url); method takes us to the page specified as an argument. This method waits for the whole page to load.

• If a page loads in a non-standard way (the execution of the JavaScript onLoad() handler is not the actual end of page loading), driver.get may return before the page is fully ready.

• The driver.quit(); method closes all open windows in the browser. It is also worth mentioning the driver.close(); method which closes the currently active window in the browser (useful when testing operates on several windows/tabs).

• In order to get the page title, use String title = driver.getTitle();.

Apart from WebDriver, another important class is WebElement. A WebElement object represents a given page element: a div, a text field or any other object.

Selenium GRID

Selenium Grid allows for running tests simultaneously on different computers and browsers. It uses the concept of a hub and nodes. The central point, called the hub, holds information on the different test platforms (nodes) and assigns them when a test script requires it.
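The hub and node idea can be illustrated with a toy model. This is not how Selenium Grid is implemented or configured: the `Hub` and `Node` classes and their fields are hypothetical and only show the principle of registering platforms and assigning a free, matching one on request.

```java
import java.util.ArrayList;
import java.util.List;

class GridSketch {

    // A node: one test platform registered with the hub.
    static final class Node {
        final String name;
        final String browser;
        final String platform;
        boolean busy;

        Node(String name, String browser, String platform) {
            this.name = name;
            this.browser = browser;
            this.platform = platform;
        }
    }

    // The hub knows all nodes and hands them out to test scripts.
    static final class Hub {
        private final List<Node> nodes = new ArrayList<>();

        void register(Node node) {
            nodes.add(node);
        }

        // Assigns the first free node matching the requested browser and platform.
        // Returns null when no matching node is currently available.
        Node assign(String browser, String platform) {
            for (Node n : nodes) {
                if (!n.busy && n.browser.equals(browser) && n.platform.equals(platform)) {
                    n.busy = true;
                    return n;
                }
            }
            return null;
        }

        void release(Node node) {
            node.busy = false;
        }
    }

    public static void main(String[] args) {
        Hub hub = new Hub();
        hub.register(new Node("node-1", "firefox", "linux"));
        hub.register(new Node("node-2", "chrome", "windows"));

        Node assigned = hub.assign("chrome", "windows");
        System.out.println(assigned == null ? "no node available" : assigned.name);
    }
}
```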

Selenium GRID advantages:

• Simultaneous testing on different hardware and program platforms.

Selenium GRID disadvantages:

• More complex configuration,

• Appropriate infrastructure is required.

Glossary of basic terms

The terms in this section have been taken from the document “Słownik wyrażeń związanych z testowaniem” (Standard Glossary of Terms Used in Software Testing) issued by Stowarzyszenie Jakości Systemów Informatycznych (International Software Testing Qualifications Board) in 2013 (Version 2.2.2). That glossary contains many more terms used in software testing.

Actual result – the behavior produced/observed when a component or system is tested.

Ad hoc (exploratory) testing – an informal test design technique where the tester actively controls the design of the tests as those tests are performed and uses information gained while testing to design new and better tests.

Basis test set – a set of test cases derived from the internal structure of a component or specification to ensure that 100% of a specified coverage criterion will be achieved.

Blocked test case – a test case that cannot be executed because the preconditions for its execution are not fulfilled.

Code coverage – an analysis method that determines which parts of the software have been executed (covered) by the test suite and which parts have not been executed, e.g. statement coverage, decision coverage or condition coverage.

Combinatorial testing – a means to identify a suitable subset of test combinations to achieve a predetermined level of coverage when testing an object with multiple parameters and where those parameters themselves each have several values, which gives rise to more combinations than are feasible to test in the time allowed.

Consultative testing – testing driven by the advice and guidance of appropriate experts from outside the test team (e.g., technology experts and/or business domain experts).

Conversion testing – testing of software used to convert data from existing systems for use in replacement systems.

Coverage – the degree, expressed as a percentage, to which a specified coverage item has been exercised by a test suite.

Coverage analysis – measurement of achieved coverage to a specified coverage item during test execution referring to predetermined criteria to determine whether additional testing is required and if so, which test cases are needed.

Debugging – the process of finding, analyzing and removing the causes of failures in software. Debugging is performed by programmers.

Defect – a flaw in a component or system that can cause the component or system to fail to perform its required function, e.g. an incorrect statement or data definition. A defect, if encountered during execution, may cause a failure of the component or system.

Defect injection – the process of intentionally adding defects to a system for the purpose of finding out whether the system can detect, and possibly recover from a defect. Defect injection is intended to mimic failures that might occur in the field.

Defect management – the process of recognizing, investigating, taking action and disposing of defects. It involves recording defects, classifying them and identifying the impact.

Defect masking – an occurrence in which one defect prevents the detection of another.

Documentation testing – testing the quality of the documentation, e.g. user guide or installation guide.

End-to-end testing – testing whether the flow of an application is performing as designed from start to finish. The purpose of carrying out end-to-end tests is to confirm that the application meets the end-user’s expectations.

Entry criteria – the set of generic and specific conditions for permitting a process to go forward with a defined task, e.g. test phase. The purpose of entry criteria is to prevent a task from starting which would entail more (wasted) effort compared to the effort needed to remove the failed entry criteria.

Error – a human action that produces an incorrect result.

Exit criteria – the set of generic and specific conditions, agreed upon with the stakeholders, for permitting a process to be officially completed. The purpose of exit criteria is to prevent a task from being considered completed when there are still outstanding parts of the task which have not been finished. Exit criteria are used to report against and to plan when to stop testing.

Failure rate – the ratio of the number of failures of a given category to a given unit of measure, e.g. failures per unit of time, failures per number of transactions, failures per number of computer runs.

False-fail result – a test result in which a defect is reported although no such defect actually exists.

False-pass result – a test result which fails to identify the presence of a defect that is actually present in the test object.

Fault density – the number of defects identified in a component or system divided by the size of the component or system (expressed in standard measurement terms, e.g. lines-of-code, number of classes or function points).

Incident – any event occurring that requires investigation.

Incident management – the process of recognizing, investigating, taking action and disposing of incidents. It involves logging incidents, classifying them and identifying the impact.

Intake test – a special instance of a smoke test to decide if the component or system is ready for detailed and further testing. An intake test is typically carried out at the start of the test execution phase.

Integration – the process of combining components or systems into larger assemblies.

Interface testing – an integration test type that is concerned with testing the interfaces between components or systems.

Monkey testing – testing by means of a random selection from a large range of inputs and by randomly pushing buttons, ignorant of how the product is being used.

Negative testing – tests aimed at showing that a component or system does not work. Negative testing is related to the testers’ attitude rather than a specific test approach or test design technique, e.g. testing with invalid input values or exceptions.

Pair testing – two persons, e.g. two testers, a developer and a tester, or an end-user and a tester, working together to find defects. Typically, they share one computer and trade control of it while testing.

Path coverage – the percentage of paths that have been exercised by a test suite. 100% path coverage implies 100% LCSAJ coverage.

Performance – the degree to which a system or component accomplishes its designated functions within given constraints regarding processing time and throughput rate.

Quality management – coordinated activities to direct and control an organization with regard to quality. Direction and control with regard to quality generally includes the establishment of the quality policy and quality objectives, quality planning, quality control, quality assurance and quality improvement.

Re-testing – testing that runs test cases that failed the last time they were run, in order to verify the success of corrective actions.

Retrospective meeting – a meeting at the end of a project during which the project team members evaluate the project and learn lessons that can be applied to the next project.

Risk – a factor that could result in future negative consequences; usually expressed as impact and likelihood.

Risk analysis – the process of assessing identified risks to estimate their impact and probability of occurrence (likelihood).

Risk management – systematic application of procedures and practices to the tasks of identifying, analyzing, prioritizing, and controlling risk.

Safety – the capability of the software product to achieve acceptable levels of risk of harm to people, business, software, property or the environment in a specified context of use.

Smoke test – a subset of all defined/planned test cases that cover the main functionality of a component or system, to ascertain that the most crucial functions of a program work, but not bothering with finer details. A daily build and smoke test is among industry best practices.

Software attack – directed and focused attempt to evaluate the quality, especially reliability, of a test object by attempting to force specific failures to occur.

Software life cycle – the period of time that begins when a software product is conceived and ends when the software is no longer available for use. The software life cycle typically includes a concept phase, requirements phase, design phase, implementation phase, test phase, installation and checkout phase, operation and maintenance phase, and sometimes, retirement phase.

Software quality – the totality of functionality and features of a software product that bear on its ability to satisfy stated or implied needs.

Stability – the capability of the software product to avoid unexpected effects from modifications in the software.

Suitability testing – the process of testing to determine the suitability of a software product for the users’ needs.

Test approach – the implementation of the test strategy for a specific project. It typically includes the decisions made that follow based on the (test) project’s goal and the risk assessment carried out, starting points regarding the test process, the test design techniques to be applied, exit criteria and test types to be performed.

Test case – a set of input values, execution preconditions, expected results and execution postconditions, developed for a particular objective or test condition, such as to exercise a particular program path or to verify compliance with a specific requirement.

Test cycle – execution of the test process against a single identifiable release of the test object.

Test data – data that exists (for example, in a database) before a test is executed, and that affects or is affected by the component or system under test.

Test infrastructure – the organizational artifacts needed to perform testing, consisting of test environments, test tools, office environment and procedures.

Test phase – a distinct set of test activities collected into a manageable phase of a project, e.g. the execution activities of a test level.

Test process – the fundamental test process comprises test planning and control, test analysis and design, test implementation and execution, evaluating exit criteria and reporting, and test closure activities.

Test schedule – a list of activities, tasks or events of the test process, identifying their intended start and finish dates and/or times, and interdependencies.

Test script – commonly used to refer to a test procedure specification, especially an automated one.

Test specification – a document that consists of a test design specification, test case specification and/or test procedure specification.

Testware – artifacts produced during the test process required to plan, design, and execute tests, such as documentation, scripts, inputs, expected results, set-up and clear-up procedures, files, databases, environment, and any additional software or utilities used in testing.

Usability – the capability of the software to be understood, learned, used and attractive to the user when used under specified conditions.

Use case – a sequence of transactions in a dialogue between a user and the system with a tangible result.

Validation – confirmation by examination and through provision of objective evidence that the requirements for a specific intended use or application have been fulfilled.

Published April 1, 2016