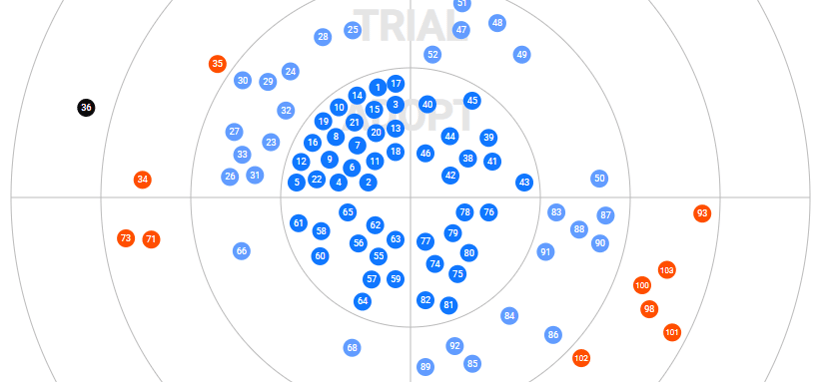

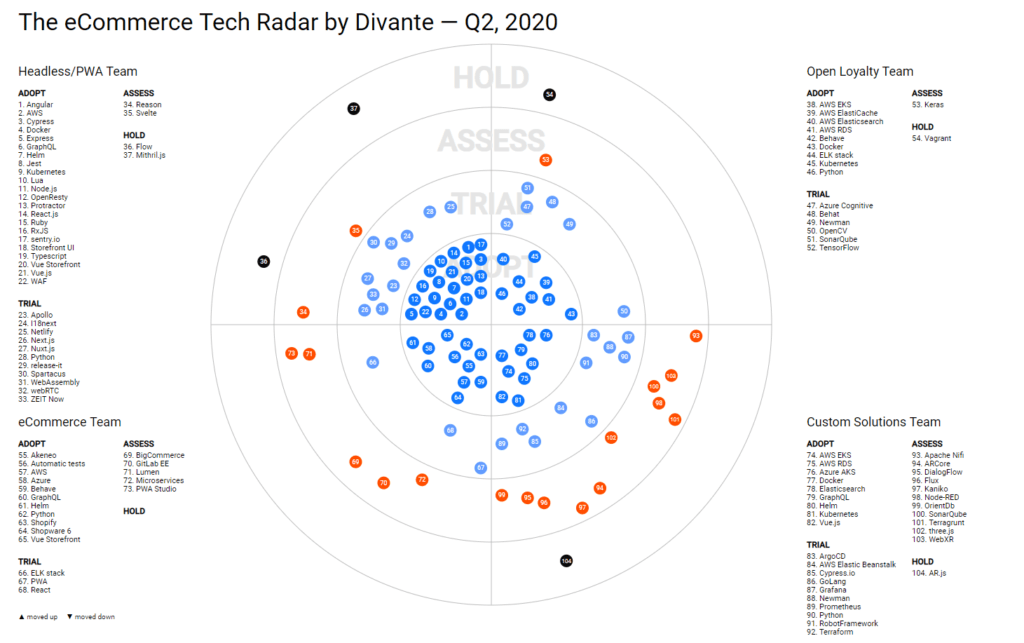

The Divante Technology Radar is our overview of the technologies we use throughout our eCommerce portfolio. We often get asked how we build our solutions and, as an open-source company, we are always keen to share our secrets. In fact, we decided to take it further by rating each technology and discussing those which we really recommend for building great software products.

You can find out more about the Tech Radar in our first blog post and also read the first interview, with Cezary Olejarczyk, CEO of Open Loyalty, the world’s first open-source loyalty program software.

Today I’m interviewing Kamil Janik, Michał Bolka, Kuba Płaskonka, and Bruno Ramalho from the Custom Solutions team at Divante. I’m hoping to hear some great stories as some of the technologies they’ve put into Divante’s eCommerce Technology Radar are more like science fiction than eCommerce!

Compare all technologies in our live Tech Radar:

View it here

PK: Hello guys! Please introduce yourselves and say something about what the Custom Solutions team is currently working on.

I’m Kuba Płaskonka; I’m a Team Leader at Divante, and I have been both a developer and a Technical Lead here for over six years.

My name is Bruno; I joined Divante a year ago and I’m a team leader. I’ve been working in commerce solutions for more than five years. Currently, I’m working on a custom solution for a governmental company.

My name is Michał; I am a Lead Developer. I have been working at Divante for five years with eCommerce, PIM, advisory, and content management systems. I am a co-founder of the open-source Pimcore-Magento connector solution.

I am Kamil; I have been working at Divante for five years. I participated in a number of Magento projects then gained experience with more custom solutions, working in one of our largest B2B projects where I was the co-author of the entire shopping path exchange. The challenges related to working with legacy code and later experience in designing and implementing PIM systems were the basis for what I do now—designing and implementing custom, sometimes even exotic solutions, such as chatbot systems, NLU solutions, or AR technologies. I am always hungry for knowledge and new challenges.

PK: That sounds like a whole range of different applications. What’s the common denominator in the projects you work on?

Team: We started as a Pimcore Tribe, focused on the Pimcore framework. We soon realized that our developers have a lot of unique skills and would love to use them in innovative projects. We work in a company that is mostly engaged in commerce projects, so this is our heritage, even as a “Custom Solutions Team”. Most of the projects we work on are somehow connected with commerce. It’s a fast-growing market so, in order to keep pace, businesses must implement cutting-edge technologies. We love to help them do that.

PK: The Custom Solutions team supports the R&D initiatives from the Divante Innovation Lab. There is an awesome video about bots, voice-recognition, and AR. Can you say something more about the R&D projects you’re working on?

Team: As innovation lab members, we are looking for nascent technologies that can bring real value to the eCommerce market. It’s a place where passion meets technology, where people with different interests can do something meaningful. We do things that we always wanted to do but never had time for.

PK: Your Tech Radar is so interesting. For example, you put ARCore in the “Assess” section. How did it get there?

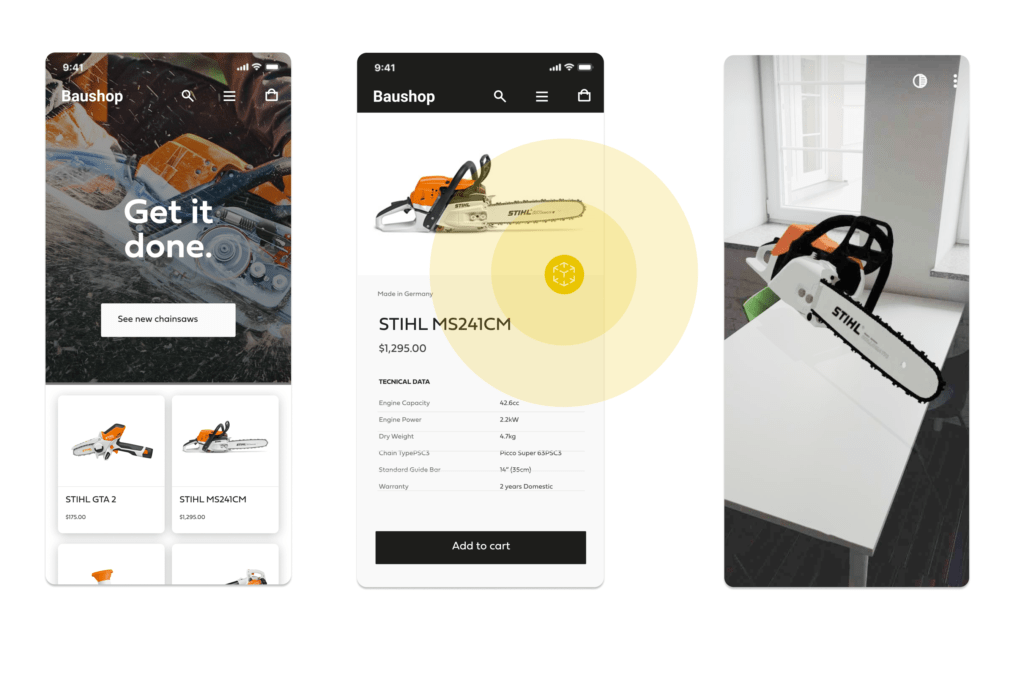

Team: We have noticed that AR creates a new opportunity for our clients. Usually, they already have some 3D models that are not used in eCommerce. There are some implementations of 360° previews but they have had no impact on the market. ARCore, Google’s AR technology for Android, allows 3D models to be rendered in Augmented Reality. In the context of products, it is perfect wherever the size of the product in relation to the surrounding space is of great importance. While the interactivity of the web version is somewhat limited compared to the Android version, it allows for extremely fast implementation, and the only challenge is choosing the right 3D model. Customers will be able to see the product live in their rooms and houses. The product is in their hands. There are successful implementations of this idea on the market, such as IKEA Place or Vyking AR, a solution for the footwear market.

PK: What’s the most challenging task when developing AR for eCommerce?

Team: The freshness of the technology itself is a big challenge here. We are talking about changes that take place from month to month. At this moment, Google plans to introduce technologies to the Chrome browser which will allow markerless Augmented Reality to be rendered directly on the web. The documentation is not yet extensive and there are not many examples of use.

See how the Custom Solutions Team worked with the Divante Innovation Lab to bring AR to the B2B eComm process > Read more about PIMSTAR

PK: Do you need to have some game development background to make these things happen?

Team: Not necessarily, but this experience can be a plus. There are some examples on the market that have pushed this idea to the next level. An example might be full VR eCommerce, where the whole User Experience is pushed to virtual reality. This idea of interactivity was taken from computer games and placed in the new market. Computer games were first in this field and they are a good reference point for what does and does not work for AR and VR.

PK: Is JavaScript performance an issue?

Team: Not particularly. For example, even AR.js, which is not developed as vigorously as three.js, can still achieve 60 fps on older devices. What does need attention is the size of the models. Correct use of caching and compression can help with this challenge.

We’re also looking closely at WebAssembly. If performance becomes a problem, we can use languages such as C++ or Rust to combat it.
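The caching and compression point above can be illustrated with a minimal Python sketch. It is not the team's implementation: the function name `prepare_model_asset`, the sample model data, and the chosen cache lifetime are all assumptions made for illustration. It shows a server-side step that gzips a model file and derives cache headers from its content.

```python
import gzip
import hashlib


def prepare_model_asset(model_bytes: bytes) -> dict:
    """Compress a 3D model payload and derive HTTP cache headers for it."""
    compressed = gzip.compress(model_bytes, compresslevel=9)
    # A content hash works as an ETag: it changes only when the model changes.
    etag = hashlib.sha256(model_bytes).hexdigest()[:16]
    return {
        "body": compressed,
        "headers": {
            "Content-Encoding": "gzip",
            "ETag": f'"{etag}"',
            # Assumed policy: let browsers reuse the model for one day.
            "Cache-Control": "public, max-age=86400",
        },
    }


# Hypothetical stand-in for a text-based model file (OBJ-style vertex lines).
sample_model = b"v 0.0 0.0 0.0\nv 1.0 0.0 0.0\nv 0.0 1.0 0.0\n" * 1000
asset = prepare_model_asset(sample_model)
```

Text-based model formats tend to compress well; binary formats that are already compressed will benefit far less, so the gain depends on the model format in use.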

PK: WebAssembly. I saw the Quake port once in the browser. It was WebAssembly. How mature is this technology? Is it a real thing for business apps in terms of stability, production readiness, and browser compatibility?

Team: At first glance, WebAssembly seemed like a technology with little to no use for us. However, with the new ideas our team is coming up with, it looks like a good option to have in our portfolio. It may be a little too early to use it, as its adoption in browsers is low, but we think that will change very quickly. We are trying to approach it proactively so that, when a new opportunity shows up, we will be ready to use it. Quake, for example, is a good reference point showing that WebAssembly can handle high-performance client-side tasks.

PK: The next technology I wanted to ask you about is DialogFlow. How do you find it helpful in the projects you’re working on at the moment?

Team: DialogFlow allows you to quickly integrate chat technologies and use advanced models that allow dynamic conversations. In addition, many solutions are already prepared for this, for example BotMan, which is based on Laravel. In NLU technologies, we are witnessing a boom: BERT, ELMo, and RoBERTa are among the more advanced and freshest solutions that already open the way to the reusability of pre-trained models. Particular attention should be paid to how relatively simple they are to use with the help of Hugging Face Transformers, which may soon appear on our Tech Radar.

PK: For our readers, can you expand on what NLU technologies are?

NLU stands for Natural Language Understanding; it is a subdomain of Natural Language Processing (NLP). It includes processes such as automated reasoning, question answering or text categorization, which are critical to dynamic conversational systems. An interesting application of these systems is to combat so-called fake news. The model can, with a certain probability, determine whether the statement is true in the context of the information on which it was trained.

See an example of the application of NLU. Check the story of OneBot, an AI-powered call center assistant from the Divante Innovation Lab > More about OneBot

PK: The next interesting thing is Node-RED and Apache NiFi. Are these two connected, and how do you use them?

Team: Both of them are data flow programming tools. This means we can use graphical interfaces to program how the data is interpreted, transformed, and transmitted to other parts of our system. Using those kinds of tools can be really handy when we want to quickly integrate some systems. Having a GUI is another plus; we can apply changes to our graphical code instantly and even give access to our customers so they can both monitor and enhance the data. This is a new approach to development: no-code development. Thanks to these technologies, we can focus on the key functionalities, not spend weeks trying to connect the whole system infrastructure.
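To make the data-flow idea concrete for readers who have not used these tools, here is a minimal Python sketch of what a flow does conceptually. It does not use Node-RED or NiFi; the node functions, the `run_flow` helper, and the sample records are hypothetical. In the real tools, each function below would be a box on the canvas and the list order would be the wires between them.

```python
from typing import Callable, Iterable, List, Optional

Record = dict
# A node takes a record and returns a transformed record, or None to drop it.
Node = Callable[[Record], Optional[Record]]


def drop_without_sku(record: Record) -> Optional[Record]:
    """Filter node: discard records that have no SKU."""
    return record if record.get("sku") else None


def normalize_price(record: Record) -> Optional[Record]:
    """Transform node: convert the price string to a rounded float."""
    return {**record, "price": round(float(record["price"]), 2)}


def run_flow(records: Iterable[Record], nodes: List[Node]) -> List[Record]:
    """Push every record through the nodes in order and collect the survivors."""
    delivered = []
    for record in records:
        current: Optional[Record] = record
        for node in nodes:
            current = node(current)
            if current is None:
                break  # the record was filtered out by this node
        if current is not None:
            delivered.append(current)
    return delivered


incoming = [
    {"sku": "CHAIR-1", "price": "129.990"},
    {"sku": "", "price": "10"},
]
delivered = run_flow(incoming, [drop_without_sku, normalize_price])
```

Reordering or swapping entries in the node list changes the flow without touching the nodes themselves, which is roughly what rewiring a graphical flow achieves.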

PK: What are the key differences between these tools? Why would I pick one or another?

Team: I think it’s the technology stack and the maturity of the solution. Apache NiFi is a well-established tool and uses Java. On the one hand, that means it is stable (our instances of NiFi never crashed on their own), but on the other, it may be problematic to expand its functionality. Node-RED uses JavaScript, which is really easy to extend and fiddle with. However, it’s also easy to break.

If your problems can be solved with what NiFi supports out of the box, then go for it! If you need a really customized solution and your team knows JS, Node-RED might be more suitable for you. We certainly recommend checking both of these out.

PK: That’s amazing. What are the best and worst parts of it?

Team: In the case of Apache NiFi, it’s really easy to use, super-fast, and optimized for data transmission and safety. On the downside, some complex transformations would be more easily implemented in a few lines of code than in multiple windows. Node-RED, in turn, is not as mature as Apache NiFi, but it is even quicker to work with. It is great for conceptual work, in which checking the data flow and visualizing the live flow is important. As the name suggests, it is built on Node.js, which further lowers the entry threshold.

PK: When we move to the “Adopt” section, we’ve got some more “classic” technologies but I’m still excited to learn more about them. For example, tell me more about how you use GraphQL.

Team: The whole company loves GraphQL: the simplicity of development, the performance, and the freedom that we give to those who are going to integrate with it. During development, we have found that API adjustments frequently cause a bottleneck when integrating the backend and frontend. GraphQL is a good solution for such issues. As soon as the backend team prepares a data model, the frontend team can build against it without custom mocking. With Pimcore, we have adapted it to our needs with our own custom extension. Nowadays, when more and more frameworks are implementing it, we can use our knowledge to leverage the potential that comes from GraphQL.

PK: What are the other features of GraphQL you find useful in your projects?

Team: We have noticed the huge integration potential that comes with GraphQL. Creating this type of API makes it much easier for other services to integrate with our code. This allows us to build solutions with a much shorter time-to-market than competing products. Documentation can be much smaller and easier to understand. But there is much more. We are exploring the use of GraphQL as a composite API that connects multiple different solutions behind a unified interface, as a facade with additional functionality, or as a proxy mechanism. We do not consider other solutions like REST APIs obsolete; however, we are expanding our toolset so we can use whichever one seems most beneficial.

PK: I heard it can be challenging to optimize and cache GraphQL queries. How do you handle this challenge?

Team: With GraphQL optimization, it all boils down to the resolver architecture. Appropriate use of the context allows data to be fetched in bulk, without excessive queries. One technique is to use closures instead of classic getters, though optimization methods are limited only by our imagination and experience. GraphQL is flexible enough to allow even the most exotic patterns. However, we should be careful and choose the right solution for the type of queries that will be called most often in the system.
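One way to read the point about context, bulk fetching, and closures is the batching pattern popularized by DataLoader. The sketch below is a plain-Python illustration of that pattern, not the team's code and not tied to any GraphQL library; `BatchContext`, `fetch_prices`, and the price data are hypothetical names invented for the example. Resolvers register the keys they need on a shared context and get back a closure; the first closure that is called triggers a single bulk fetch for everything registered so far.

```python
from typing import Any, Callable, Dict, List


class BatchContext:
    """Shared per-request context that collects keys and fetches them in bulk."""

    def __init__(self, fetch_many: Callable[[List[int]], Dict[int, Any]]):
        self._fetch_many = fetch_many
        self._pending: set = set()
        self._cache: Dict[int, Any] = {}

    def defer(self, key: int) -> Callable[[], Any]:
        """Register a key and return a closure that yields its value later."""
        if key not in self._cache:
            self._pending.add(key)

        def resolve() -> Any:
            if self._pending:
                # One bulk call covers every key registered so far.
                self._cache.update(self._fetch_many(sorted(self._pending)))
                self._pending.clear()
            return self._cache[key]

        return resolve


# Hypothetical data source; query_log records each bulk call that is made.
PRICES = {1: 10.0, 2: 25.5, 3: 7.25}
query_log: List[List[int]] = []


def fetch_prices(ids: List[int]) -> Dict[int, float]:
    query_log.append(list(ids))
    return {i: PRICES[i] for i in ids}


context = BatchContext(fetch_prices)
# Each "resolver" only registers interest and returns a closure, not a value.
deferred = [context.defer(product_id) for product_id in (1, 2, 3, 2)]
prices = [resolve() for resolve in deferred]
```

In this run, four field resolutions result in one entry in `query_log` rather than four separate lookups. A classic getter would have fetched each price at the moment the field was resolved.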

PK: From the same section of the Tech Radar, it seems like your applications are pretty much cloud-based. You’ve got EKS and Kubernetes, for example. When do you use one or the other?

Team: EKS is a service provided by AWS to manage Kubernetes so, depending on the client, we use EKS when the client requires AWS, or AKS when the client requires Azure. If we are managing the application ourselves, we use pure Kubernetes with some help from Helm.

PK: Any preference between AKS and EKS?

Team: For Kubernetes, there is not a big difference. Amazon Web Services is a leader in the cloud-services market but we see the potential that comes from the whole Azure ecosystem. It consists of hundreds of cutting-edge services and technologies. We have started a certification process for our developers in order to use all the benefits that come from that. Usually, our preference is whichever best suits our projects and our clients.

PK: Python and Go are in the Trial section. What does that mean? Are you working on a product or just assessing these for a client’s applications?

Team: We believe that there is a technology that best solves each specific problem. We try to find the best technology, and those are just two examples. We also like the simplicity of those two and how easy it is to deploy and run the applications. We are open to new technologies if they bring value to our work. We first saw the potential while developing internal tools to help us work more efficiently with external time trackers like Toggl or Avaza and project management tools like Jira. The next step was to prepare a proof-of-concept API built with Go. We are now in the process of reviewing the best use cases for each of the technologies, in order to be able to advise our customers.

PK: Maybe this is an obvious question, but when do you use Python and when do you use Go? And why?

Team: We are really amazed by the performance of Go. At the moment, we are using Go for some internal projects. It’s amazing how fast it is. We see it as an answer for the high-performance, high-availability parts of a system, such as statistical calculations, processing millions of HTTP requests, APIs, GraphQL, and parsers for large files. We use Python in cases where its enormous library of packages can be helpful, e.g. in machine learning, data transformation, and numerical calculations.

PK: How do you assess the popularity of these technologies? Do you want to go deeper into one or the other?

Team: Right now we’re leaning towards Go, even if it’s not as popular as Python. This doesn’t mean we’ll abandon Python altogether. We always try to use the best tools for the problem we face, so the choice of language for our next big project will depend mostly on the advantages it brings.

PK: I’m looking forward to the updates from you and your team for the upcoming quarters. Anything that you’re assessing at the moment and we might hear about?

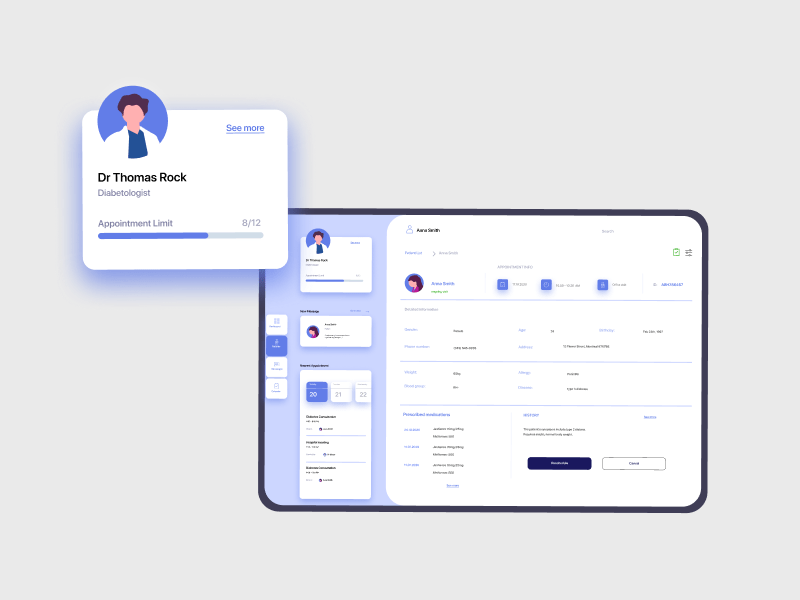

Team: We are working on many ideas at once: for the health industry, small businesses impacted by COVID-19, the furniture industry, and much more. We consider technologies for developers to be like materials for constructors. You cannot consider yourself a versatile constructor if you know only how to build with bricks. Try to make a greenhouse or sports arena with that. The most important thing is knowing not only how to use a given technology, but when to use it.

PK: Do you want to leave us with one project that people should check out to understand your work?

If you want to see how solutions can have a real impact on everyday lives, check what we’ve prepared for the health industry with the Pharmacy of the Future concept. Health is obviously the big topic in the world right now and it is an area with obstacles to innovation, so we are excited to be making a contribution.

Published May 7, 2020